This is something that has bothered me a lot over the last few years and the last few companies. Most of my original classmates who specialised in Civil or Mechanical Engineering take CPD as a given. Whatever company they went to would be expected to provide CPD for them in a structured way, and to support them in their progress towards the C.Eng accreditation. The company would be expected to provide mentoring, and to send employees on accredited CPD courses (like those run by the IEI).

But it wasn’t just civil and mechanical engineers; it was the same in all the professions. Barristers (since 2005) are required to undertake CPD work. Doctors (since 2007) are required to undertake 50 hours a year (or 250 over 5 years) of CPD work. For teachers, it is due to become compulsory in the near future. Accountants have to do it. Auctioneers do it.

So what about computer engineers and programmers and the IT sector as a whole, Ireland’s second-largest industry and the claimed saviour of our entire economy, an industry characterised by continual change and with the shortest period to technical obsolescence of any of our industries, where CPD is so obviously needed?

Personally, I can say that none of the companies I ever worked for (and granted, I’ve not racked up several decades of wide-ranging experience yet) were involved in a structured way with CPD. In fact, none of them ever mentioned it at all. The closest I saw in the last seven or eight years was when one company (after much lobbying by the coding team) begrudgingly agreed to buy some reference books from amazon.com which the coders could use (and take home if they signed for it, and it was someone’s job to track who had what book). It was pitiful – they were using time from one coder (a commodity they usually charged clients around €100-200 per hour for) in order to track which of their employees had a book worth maybe €30, which they were trying to use to improve their skills (something the company would only benefit from).

At best, in companies like that, CPD is an individual responsibility. Courses, conferences, seminars – they have to happen on your time, whether it be a weekend or a holiday. Books, admission fees, subscription fees, they become a living expense shared only by others in our field. And given our industry’s counterproductive love for masochistically long hours, you’re talking about working ten hours a day during a slow week, then trying to grab an hour here or there to read any CPD material you can, and that’s never light reading. Small wonder then, that as far back as Peopleware, it’s been known that the industry average for CPD work in IT is not even one book. Not one book a month or one book a year, but not one book, ever. In fact, just by reading this blog, you’re one of the technical elite (not that this blog is special — if you’re reading any blog on programming, you’re one of an elite group in our industry).

Worse yet, in several of the places I worked over the last few years, asking for CPD support would have been a black mark against you; it would have been seen as an admission of incompetence and nothing more. The attitude was, effectively, that you should have learnt everything in college, and now it was time to stop with the time-wasting of learning and get on with billable hours. CPD was something you did at home and didn’t mention at work. Supporting CPD in those places was seen as the company spending money to improve the employees’ CVs so they could flee elsewhere. Oddly enough, not supporting CPD (and generally treating employees like second-class citizens) often prompted that flight, leaving the company scrambling to replace the expertise and knowledge that walked out the door with each departure.

The really depressing part of all of this is that studies have shown that CPD benefits the company dramatically. It’s well-known. Even Fred Brooks’s classic, No Silver Bullet, mentions CPD mentoring as a vital step in finding great developers. It’s a primary difference between the top 100 companies in a field and the field as a whole, when done properly and assessed correctly. But in Ireland, only 10% of companies are involved in CPD to a high level, and only 40% get involved at any level at all. Looking at the IEI’s list of participating organisations in its CPD programme is telling – in the Tech section of the list there are only nine companies (out of a total of 94) and most of those are large multinationals (BT, IBM, Intel and so on). Of our native SME sector, there are, basically, none.

Nor are there many courses for the IT sector in the IEI’s lineup of CPD courses. There are non-technical courses in common with other sectors of course – Project Management, Communications and so forth; but for technical courses there’s only one, on iPhone apps.

Nor is there much in the way of third-level support, at least from my limited vantage point. I certainly never encountered any mention of CPD during my undergrad degree, nor of the C.Eng qualification. Some universities like DIT are now running CPD courses with the IEI, so hopefully this is changing.

But the companies are where this all has to start. Why do we never see recruitment ads looking for specific CPD accreditations? Why is there such poor support for the C.Eng qualification? Why do so few small shops go for the IEI CPD Accredited Employer standard? As I said on this thread on the boards.ie Development forum, if a company is not willing to take on CPD in a proper manner, it has no business complaining about the standard of potential hires, because it is part of the problem. And a critical part, at that.

I think myself that in the startup sector of our industry especially, this is a side-effect of the buy-in to the cult of the ‘rock star developer’. Watch TWISt sometime, especially the DHH interview, and ask yourself — when so much focus is put on being one of the top developers around — when we don’t have any objective way to measure how good someone in that field is, but rely instead on how often people are talking about them — would people this arrogant and unprofessional ever take part in a process like CPD which is based on the idea that you don’t know everything? Would anyone looking for a new role ever mention CPD to them? And how do we expect companies will treat us, when we publicly espouse such unprofessional viewpoints?

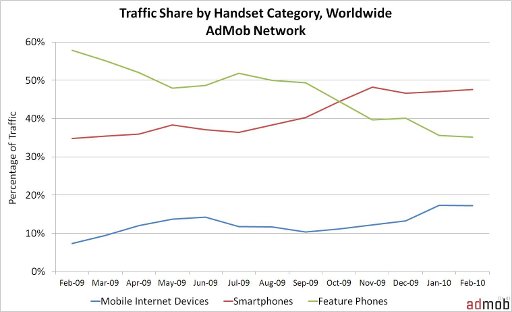

